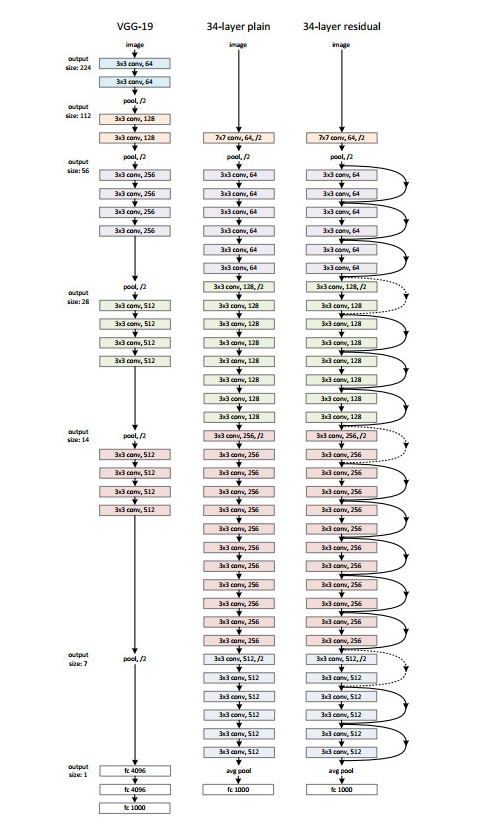

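Design rules for the network: (1) layers whose output feature maps have the same size use the same number of filters, i.e. the same number of channels; (2) when the feature map size is halved (by downsampling, e.g. pooling or a stride-2 convolution), the number of filters is doubled, which keeps the computational cost per layer roughly constant.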
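One way to see why the second rule is reasonable: the cost of a convolution grows with the feature map area and with the product of input and output channels, so halving the side length while doubling the channels should leave the cost unchanged. The sketch below checks this arithmetic. The helper `conv_mult_adds` and the sizes 56x56x64 and 28x28x128 are illustrative assumptions, not part of the original code.

```python
def conv_mult_adds(height, width, c_in, c_out, kernel):
    """Multiply-adds of one stride-1, 'same'-padded convolution (bias ignored)."""
    return height * width * c_in * c_out * kernel * kernel


# Illustrative sizes (assumed): one layer before and one after a downsampling step.
before = conv_mult_adds(56, 56, 64, 64, 3)     # larger map, fewer channels
after = conv_mult_adds(28, 28, 128, 128, 3)    # half the side length, twice the channels

print(before, after)
```

Both calls give the same count, because the area shrinks by a factor of 4 while the channel product grows by a factor of 4.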

```python
import tensorflow as tf
from tensorflow import keras
```

# 按 He et al. (2016) 定義兩類殘差塊。

def identity_block(X, f, channels):

'''

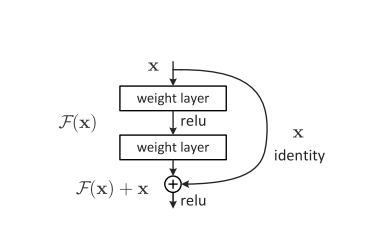

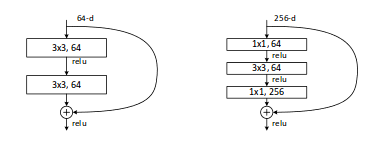

卷積塊--(等值函式)--卷積塊

'''

F1, F2, F3 = channels

X_shortcut = X

# 主通路

# 塊1

X = keras.layers.Conv2D(filters=F1, kernel_size=(1, 1), strides=(1,1), padding ='valid')(X)

X = keras.layers.BatchNormalization(axis=3)(X)

X = keras.layers.Activation('relu')(X)

# 塊 2

X = keras.layers.Conv2D(filters=F2, kernel_size=(f, f), strides=(1, 1), padding='same')(X)

X = keras.layers.BatchNormalization(axis=3)(X)

X = keras.layers.Activation('relu')(X)

# 塊 3

X = keras.layers.Conv2D(filters=F3, kernel_size=(1, 1), strides=(1, 1), padding='valid')(X)

X = keras.layers.BatchNormalization(axis=3)(X)

# 跳躍連線

X = keras.layers.Add()([X, X_shortcut])

X = keras.layers.Activation('relu')(X)

return X
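The 1x1, fxf, 1x1 layout of the main path keeps the block cheap, because the fxf convolution runs on a reduced number of channels. As a rough illustration, the sketch below counts the Conv2D parameters of the main path for `channels=[64, 64, 256]` on a 256-channel input and compares them with a hypothetical alternative of two 3x3 convolutions at 256 channels. The helpers `conv_params` and `bottleneck_params` are assumptions introduced here, and BatchNormalization parameters are left out.

```python
def conv_params(c_in, c_out, kernel):
    """Weights plus biases of a Conv2D layer with a square kernel."""
    return kernel * kernel * c_in * c_out + c_out


def bottleneck_params(c_in, f, channels):
    """Conv2D parameters on the main path of the block (BatchNormalization excluded)."""
    f1, f2, f3 = channels
    return (conv_params(c_in, f1, 1)      # 1x1 convolution reduces the channels
            + conv_params(f1, f2, f)      # fxf convolution on the reduced channels
            + conv_params(f2, f3, 1))     # 1x1 convolution restores the channels


bottleneck = bottleneck_params(256, 3, [64, 64, 256])
# Hypothetical comparison: two plain 3x3 convolutions at 256 channels.
plain = 2 * conv_params(256, 256, 3)

print(bottleneck, plain)
```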

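```python
def convolutional_block(X, f, channels, s=2):
    '''
    Conv layers -- (conv shortcut) -- conv layers.

    The main path matches identity_block, except that the first
    convolution uses stride s. The shortcut is a 1x1 convolution with
    stride s and F3 filters, so both paths have the same shape when
    they are added.
    '''
    F1, F2, F3 = channels
    X_shortcut = X

    # Main path
    # Layer 1: 1x1 convolution with stride s (downsampling)
    X = keras.layers.Conv2D(filters=F1, kernel_size=(1, 1), strides=(s, s), padding='valid')(X)
    X = keras.layers.BatchNormalization(axis=3)(X)
    X = keras.layers.Activation('relu')(X)

    # Layer 2: fxf convolution
    X = keras.layers.Conv2D(filters=F2, kernel_size=(f, f), strides=(1, 1), padding='same')(X)
    X = keras.layers.BatchNormalization(axis=3)(X)
    X = keras.layers.Activation('relu')(X)

    # Layer 3: 1x1 convolution (no activation before the addition)
    X = keras.layers.Conv2D(filters=F3, kernel_size=(1, 1), strides=(1, 1), padding='valid')(X)
    X = keras.layers.BatchNormalization(axis=3)(X)

    # Skip connection: project the input to the new shape, add, then apply ReLU
    X_shortcut = keras.layers.Conv2D(filters=F3, kernel_size=(1, 1),
                                     strides=(s, s), padding='valid')(X_shortcut)
    X_shortcut = keras.layers.BatchNormalization(axis=3)(X_shortcut)

    X = keras.layers.Add()([X, X_shortcut])
    X = keras.layers.Activation('relu')(X)
    return X
```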

```python
# ===== ResNet-50 ===== #

IN_GRID = keras.layers.Input(shape=(256, 256, 3))  # 256x256 RGB input image

# Zero padding
X = keras.layers.ZeroPadding2D((3, 3))(IN_GRID)

# Main path (stem): 7x7 convolution, batch normalization, ReLU, max pooling
X = keras.layers.Conv2D(64, (7, 7), strides=(2, 2))(X)
X = keras.layers.BatchNormalization(axis=3)(X)
X = keras.layers.Activation('relu')(X)
X = keras.layers.MaxPooling2D((3, 3), strides=(2, 2))(X)
```
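To follow how the spatial size shrinks through these first layers, the arithmetic can be sketched in plain Python. This assumes the Keras default `padding='valid'` for both the 7x7 convolution and the max pooling, since neither call sets `padding`. The helper `valid_out` is introduced here for illustration only.

```python
def valid_out(size, kernel, stride):
    """Output length of a 'valid' (unpadded) convolution or pooling window."""
    return (size - kernel) // stride + 1


size = 256                        # input side length
size = size + 2 * 3               # ZeroPadding2D((3, 3)) adds 3 pixels on each side
after_conv = valid_out(size, 7, 2)        # 7x7 convolution, stride 2
after_pool = valid_out(after_conv, 3, 2)  # 3x3 max pooling, stride 2

print(after_conv, after_pool)
```

Under these assumptions the feature map is 128x128 after the convolution and 63x63 after the pooling. The odd size comes from pooling without padding.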

```python
# Residual stage 1
# s=1: the max pooling above has already downsampled the feature map,
# so ResNet-50 does not reduce the size again in this stage.
X = convolutional_block(X, 3, [64, 64, 256], s=1)
X = identity_block(X, 3, [64, 64, 256])
X = identity_block(X, 3, [64, 64, 256])

# Residual stage 2
X = convolutional_block(X, 3, [128, 128, 512])
X = identity_block(X, 3, [128, 128, 512])
X = identity_block(X, 3, [128, 128, 512])
X = identity_block(X, 3, [128, 128, 512])

# Residual stage 3
X = convolutional_block(X, 3, [256, 256, 1024], s=2)
X = identity_block(X, 3, [256, 256, 1024])
X = identity_block(X, 3, [256, 256, 1024])
X = identity_block(X, 3, [256, 256, 1024])
X = identity_block(X, 3, [256, 256, 1024])
X = identity_block(X, 3, [256, 256, 1024])

# Residual stage 4
X = convolutional_block(X, 3, [512, 512, 2048])
X = identity_block(X, 3, [512, 512, 2048])
X = identity_block(X, 3, [512, 512, 2048])
```
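The "50" in ResNet-50 can be recovered from the four stages above. The short check below counts the weighted layers, assuming the usual convention that the 1x1 convolutions on the shortcut paths are not counted. The variable names are illustrative.

```python
blocks_per_stage = [3, 4, 6, 3]   # one convolutional_block plus the identity_blocks in each stage
convs_per_block = 3               # 1x1, fxf, 1x1 on the main path

stem_convs = 1                    # the initial 7x7 convolution
dense_layers = 1                  # the final 1000-way classifier

depth = stem_convs + sum(blocks_per_stage) * convs_per_block + dense_layers
print(depth)
```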

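```python
# Global average pooling: average each channel over all spatial positions.
# The output already has shape (batch, channels), so no Flatten is needed.
X = keras.layers.GlobalAveragePooling2D()(X)

# Output classifier (1000 classes, as in ImageNet)
OUT = keras.layers.Dense(1000, activation='softmax')(X)
```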
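Whether the classifier sees a globally averaged vector or a flattened feature map makes a large difference to its size. The sketch below compares the two cases for an assumed final feature map of 8x8x2048. The spatial size is an illustrative assumption (it depends on the input size and the strides used), and `dense_params` is a helper introduced here.

```python
def dense_params(in_features, units):
    """Weights plus biases of a fully connected layer."""
    return in_features * units + units


height, width, channels = 8, 8, 2048   # assumed final feature map (illustrative)

flattened = dense_params(height * width * channels, 1000)  # Flatten, then Dense(1000)
pooled = dense_params(channels, 1000)                      # global average pooling, then Dense(1000)

print(flattened, pooled)
```

With these numbers the flattened variant needs 64 times as many weights in the classifier as the pooled one.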

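```python
# Create the model
ResNet50 = keras.models.Model(inputs=IN_GRID, outputs=OUT)

# Compile the model (`lr` and `decay` are deprecated; use `learning_rate`)
opt = keras.optimizers.Adam(learning_rate=0.001)
ResNet50.compile(loss=keras.losses.categorical_crossentropy, optimizer=opt, metrics=['accuracy'])

# Plot the model structure (requires pydot and Graphviz)
keras.utils.plot_model(ResNet50, show_shapes=True, show_layer_names=False)

# Train the model (Model.fit also accepts generators; fit_generator is deprecated)
ResNet50.fit(...)
```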